The Father of Information

In 1948, Claude Shannon published "A Mathematical Theory of Communication," giving birth to information theory. His key insight: information is that which reduces uncertainty.

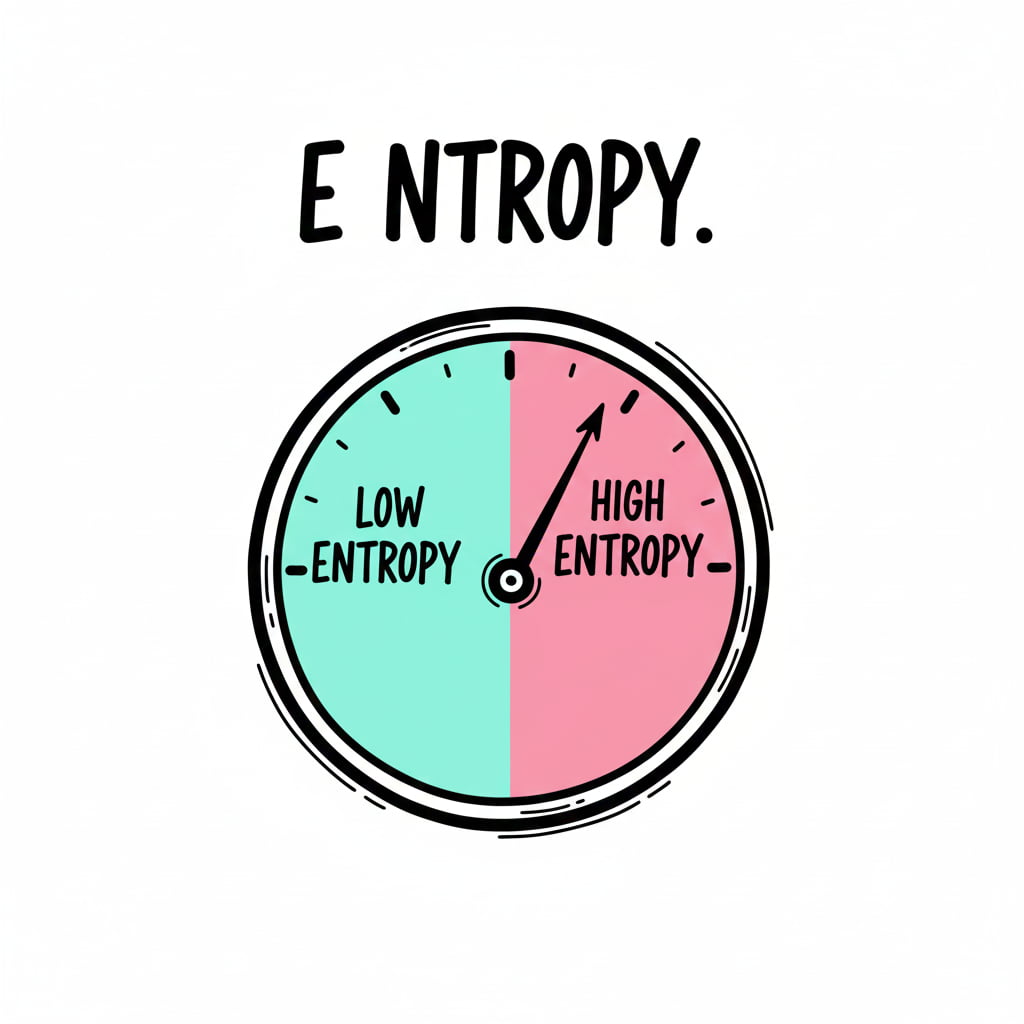

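Shannon quantified uncertainty with a measure he called entropy, borrowing the term physicists use to describe disorder. For a source whose outcomes occur with probabilities p₁, …, pₙ, the entropy is H = −Σ pᵢ log₂ pᵢ, measured in bits. High entropy means high uncertainty: a fair coin toss carries one full bit. Low entropy means predictability: a coin that always lands heads carries zero bits, because its outcome tells you nothing you didn't already know.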
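To make the formula concrete, here is a minimal Python sketch of the standard discrete entropy, H = −Σ p log₂ p. It assumes the distribution is given as a plain list of probabilities that sum to 1. The function name `shannon_entropy` and the example coins are illustrative choices, not anything taken from Shannon's paper.

```python
import math
from collections.abc import Sequence


def shannon_entropy(probs: Sequence[float]) -> float:
    """Return the Shannon entropy, in bits, of a discrete distribution.

    Outcomes with probability 0 are skipped, following the usual
    convention that 0 * log(0) contributes nothing.
    """
    if any(p < 0 for p in probs):
        raise ValueError("probabilities must be non-negative")
    if not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must sum to 1")
    # p * log2(1/p) equals -p * log2(p), written so every term is non-negative.
    return sum(p * math.log2(1 / p) for p in probs if p > 0)


# Illustrative distributions: more evenly spread means more uncertainty.
examples = {
    "fair coin": [0.5, 0.5],
    "biased coin": [0.9, 0.1],
    "certain coin": [1.0, 0.0],
    "fair 4-sided die": [0.25, 0.25, 0.25, 0.25],
}

for name, dist in examples.items():
    print(f"{name:>16}: {shannon_entropy(dist):.3f} bits")
```

Running it shows the pattern described above: the fair coin comes out at 1 bit, the 90/10 coin at about 0.469 bits, the certain coin at 0 bits, and the fair four-sided die at 2 bits. Skewing the probabilities lowers entropy because the outcome becomes easier to predict.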

This simple insight has profound implications for how we think about automation, learning, and human decision-making.