The Architecture of the Verifiable Web

Infrastructure for Trusted AI

As AI agents become more autonomous, they face a fundamental problem: how do they know what to trust? The current web was built for humans to read and interpret. The next web may need to be built for machines to verify.

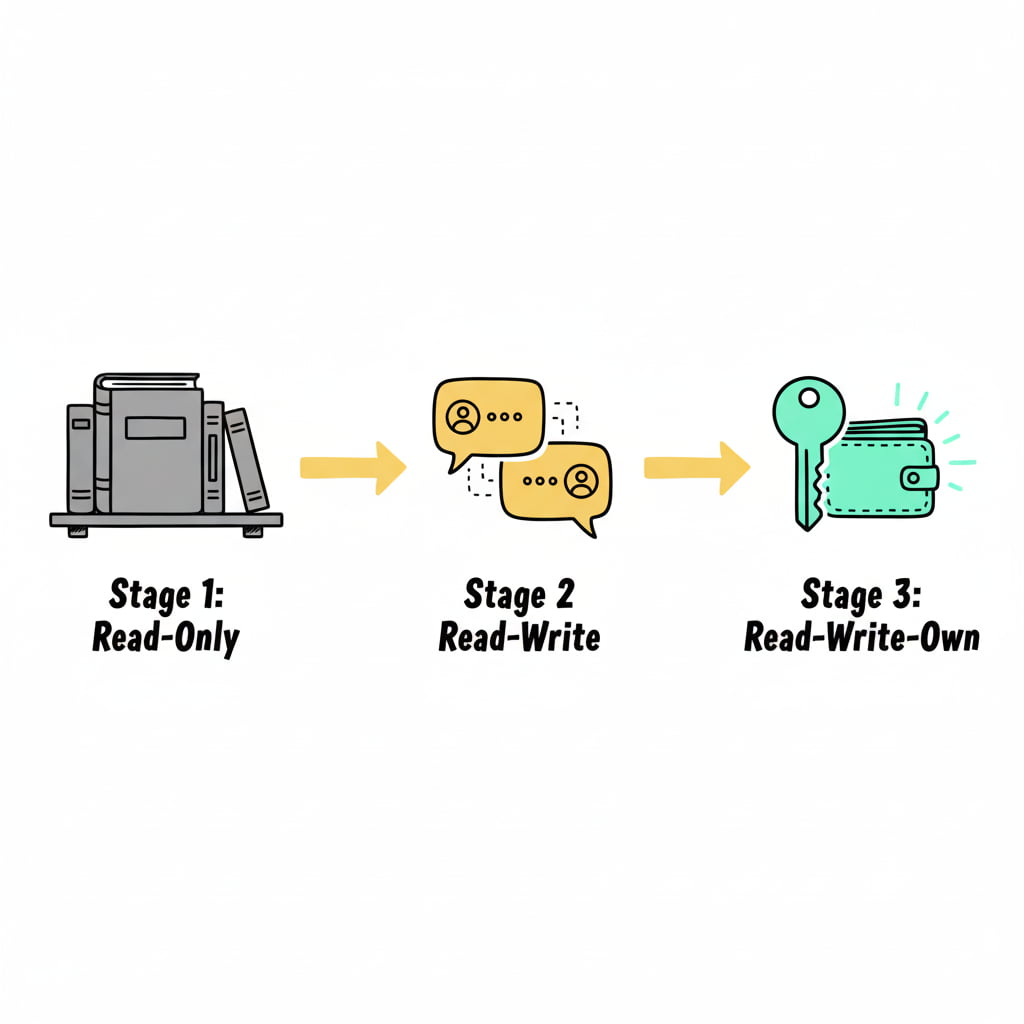

To understand where we're going, we must understand where we've been. The web has evolved through distinct phases, each defined by a different relationship between users and data.

"Read-Only"

The Library. Static pages published by a few, consumed by many. Information flows one way.

"Read-Write"

The Platform. Users create content, but platforms own the data. Value accrues to intermediaries.

"Read-Write-Own"

The Cooperative. Assets held via cryptographic keys. Ownership becomes native to the protocol.

To enable a verifiable web, we must resolve an apparent paradox: information must be publicly verifiable without becoming publicly visible. We address this through the "Glass House with Curtains" framework.

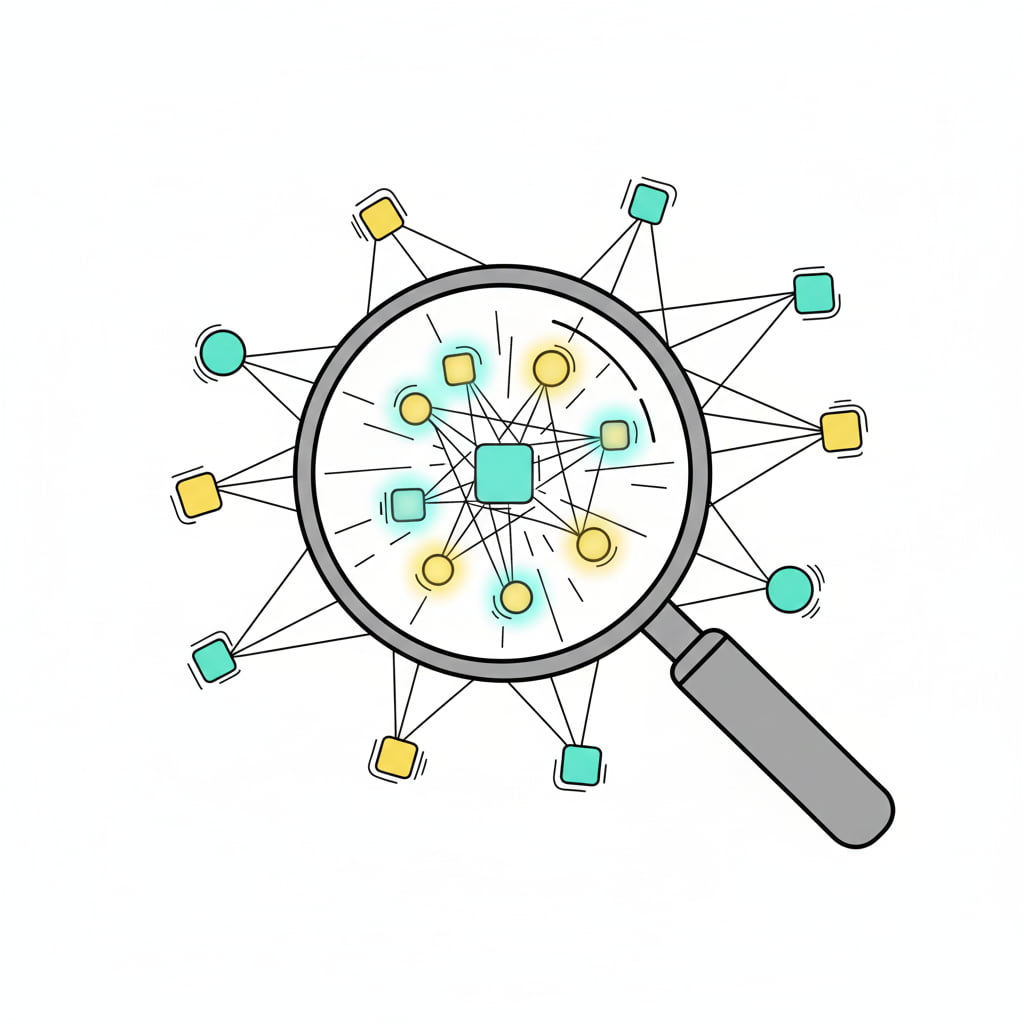
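The framework itself is laid out in the figure above. As a hedged illustration of the underlying property, here is a salted hash commitment, one standard cryptographic way to publish something anyone can check without publishing the data itself. This is a minimal Python sketch using only the standard library, not a description of how the framework is built; the record string is invented for the example.

```python
import hashlib
import hmac
import secrets


def commit(data: bytes) -> tuple[str, bytes]:
    """Return a public commitment and the private salt needed to open it later."""
    salt = secrets.token_bytes(16)  # random salt stops outsiders from confirming guesses
    digest = hashlib.sha256(salt + data).hexdigest()
    return digest, salt


def verify(commitment: str, data: bytes, salt: bytes) -> bool:
    """Check revealed data and salt against a previously published commitment."""
    candidate = hashlib.sha256(salt + data).hexdigest()
    return hmac.compare_digest(candidate, commitment)  # constant-time comparison


# Only the commitment is published; the record and salt stay with the owner.
public_commitment, private_salt = commit(b"supplier certificate #A-17")

# Later, the owner reveals both to a chosen verifier.
print(verify(public_commitment, b"supplier certificate #A-17", private_salt))  # True
print(verify(public_commitment, b"tampered record", private_salt))             # False
```

Publishing only the digest is the "glass house"; withholding the record and salt until the owner chooses to reveal them is the "curtains". The random salt matters: without it, anyone could confirm a guess of a short record simply by hashing the guess themselves.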

As AI becomes an increasingly important consumer of web data, the focus is shifting from raw data retrieval to Semantic Discovery.

Emerging platforms like OriginTrail are exploring ways to organize data into searchable, linked graphs. This could allow users—and AI agents—to search for Abstractions: querying by concept, entity, or relationship alongside traditional keywords.
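To make "querying by relationship" concrete, the sketch below stores a tiny, made-up knowledge graph as subject-predicate-object triples and answers a question by following links rather than matching keywords. It is a toy in-memory model under our own assumptions; it does not use OriginTrail's API, and every entity and predicate name is hypothetical.

```python
from typing import Optional

Triple = tuple[str, str, str]

# Hypothetical toy graph of (subject, predicate, object) triples.
GRAPH: list[Triple] = [
    ("BatchA", "producedBy", "FactoryX"),
    ("BatchB", "producedBy", "FactoryY"),
    ("FactoryX", "locatedIn", "Slovenia"),
    ("FactoryY", "locatedIn", "Chile"),
    ("BatchA", "certifiedAs", "Organic"),
]


def match(s: Optional[str] = None, p: Optional[str] = None,
          o: Optional[str] = None) -> list[Triple]:
    """Return triples matching the pattern; None acts as a wildcard."""
    return [t for t in GRAPH
            if (s is None or t[0] == s)
            and (p is None or t[1] == p)
            and (o is None or t[2] == o)]


def batches_from_country(country: str) -> list[str]:
    """Relationship query: batches whose producer is located in `country`."""
    factories = {s for s, _, _ in match(p="locatedIn", o=country)}
    return sorted(s for s, _, o in match(p="producedBy") if o in factories)


print(batches_from_country("Slovenia"))  # ['BatchA']
```

None of BatchA's own triples mention Slovenia; the answer comes from traversing `producedBy` and then `locatedIn`. Production graph stores typically expose this kind of pattern matching through a query language such as SPARQL.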

Through protocols like the Model Context Protocol (MCP), AI models could "pull" these enriched abstractions in real time, finding verified knowledge without needing to index entire networks.
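MCP messages are JSON-RPC 2.0, and a client asks a server to run one of its tools with a `tools/call` request carrying the tool's `name` and `arguments`. The sketch below only builds that message. The tool name `find_knowledge_assets` and its argument fields are hypothetical, and the transport (such as stdio) and the initialization handshake a real client performs first are omitted.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC request ids must be unique per session


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# Hypothetical tool on a hypothetical knowledge-graph MCP server.
msg = make_tool_call("find_knowledge_assets",
                     {"concept": "organic certification", "min_verifications": 3})
print(msg)
```

The "pull" model shows up in the shape of the exchange: the agent asks for a specific abstraction when it needs one, instead of crawling and indexing the network ahead of time.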

By analyzing metadata within a Decentralized Knowledge Graph, an AI Agent could potentially calculate the entropy of a specific knowledge asset before deciding how to use it.

Low Entropy

The Signal: High verification counts, signatures from trusted issuers, a low rate of updates.

Potential Action: The Agent treats this as Fact and executes with confidence.

High Entropy

The Signal: Conflicting assertions, lack of trusted signatures, rapid and volatile updates.

Potential Action: The Agent flags this as Debate and defers to human judgment.
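The text does not say how entropy would be computed. One plausible reading, sketched here as an assumption, is Shannon entropy over the values different publishers assert for the same claim (0 bits when everyone agrees, rising as assertions conflict), combined with the other signals listed above. The metadata fields and every threshold are hypothetical, chosen only for illustration.

```python
import math
from collections import Counter
from dataclasses import dataclass


@dataclass
class AssetMetadata:
    assertions: list[str]    # values different publishers assert for the same claim
    trusted_signatures: int  # signatures from issuers the agent already trusts
    verifications: int       # independent verification count
    updates_last_30d: int    # how often the asset was revised recently


def assertion_entropy(assertions: list[str]) -> float:
    """Shannon entropy in bits of the asserted values; 0.0 means full agreement."""
    if not assertions:
        return float("inf")  # nothing asserted: treat as maximally uncertain
    total = len(assertions)
    return sum(-(c / total) * math.log2(c / total)
               for c in Counter(assertions).values())


def classify(meta: AssetMetadata, max_entropy: float = 0.5,
             min_verifications: int = 3, max_updates: int = 2) -> str:
    """Return 'Fact' only if every low-entropy signal holds, else 'Debate'."""
    low_entropy = (
        assertion_entropy(meta.assertions) <= max_entropy
        and meta.trusted_signatures > 0
        and meta.verifications >= min_verifications
        and meta.updates_last_30d <= max_updates
    )
    return "Fact" if low_entropy else "Debate"


stable = AssetMetadata(["ISO-9001"] * 5, trusted_signatures=2,
                       verifications=12, updates_last_30d=0)
contested = AssetMetadata(["safe", "unsafe", "safe", "unsafe"], trusted_signatures=0,
                          verifications=1, updates_last_30d=9)
print(classify(stable))     # Fact
print(classify(contested))  # Debate
```

Requiring every condition for "Fact" makes "Debate" the default, so a missing or weak signal routes the asset toward human judgment rather than automatic execution.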

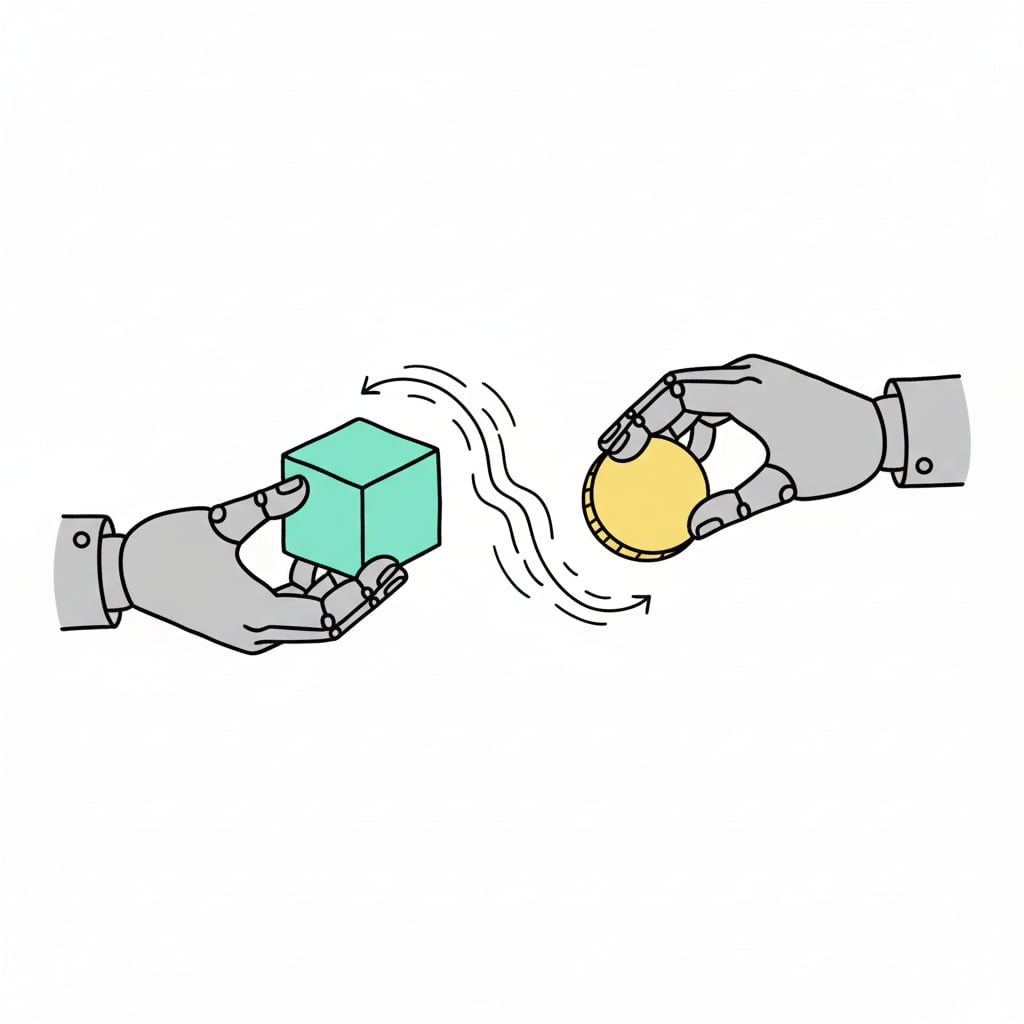

In decentralized economies, data could be treated as a high-velocity financial asset. One emerging model for converting "Knowledge" to "Currency" follows a path like this:

1. Rights are represented as digital tokens or access credentials, turning data into a potentially tradable, liquid asset.
2. Payments are released automatically upon verified access, which could reduce the need for trusted intermediaries.
3. Settlement happens near-instantly in stable digital currencies, which aims to remove price volatility from transactions.
4. AI agents earn and spend tokens autonomously, an emerging frontier that could enable self-funded AI services.
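Releasing payment automatically upon verified access is essentially an escrow. In practice this would be a smart contract on a blockchain; the Python class below models only its state transitions, under our own assumptions. The class name, the integer-unit amounts, the deadline-based refund, and the boolean `access_verified` (standing in for whatever proof of delivery a real system would check, such as a signed receipt) are all hypothetical.

```python
from enum import Enum, auto


class EscrowState(Enum):
    FUNDED = auto()
    RELEASED = auto()
    REFUNDED = auto()


class DataAccessEscrow:
    """Hypothetical escrow: the buyer's payment is held until access is verified."""

    def __init__(self, buyer: str, seller: str, amount: int, deadline: int):
        self.buyer, self.seller = buyer, seller
        self.amount = amount      # integer smallest units of a stable currency
        self.deadline = deadline  # time after which the buyer may reclaim funds
        self.state = EscrowState.FUNDED
        self.balances: dict[str, int] = {buyer: 0, seller: 0}

    def confirm_access(self, access_verified: bool) -> None:
        """Release funds to the seller once delivery is verified."""
        if self.state is not EscrowState.FUNDED:
            raise RuntimeError("escrow already settled")
        if not access_verified:
            raise ValueError("access not verified; funds stay locked")
        self.balances[self.seller] += self.amount
        self.state = EscrowState.RELEASED

    def refund(self, now: int) -> None:
        """Return funds to the buyer if the deadline passes without delivery."""
        if self.state is not EscrowState.FUNDED:
            raise RuntimeError("escrow already settled")
        if now < self.deadline:
            raise ValueError("deadline not reached")
        self.balances[self.buyer] += self.amount
        self.state = EscrowState.REFUNDED


escrow = DataAccessEscrow("agent-7", "data-provider", amount=250, deadline=100)
escrow.confirm_access(access_verified=True)
print(escrow.state.name, escrow.balances)
```

Because each transition checks the current state, funds can be paid out at most once, either to the seller on verified delivery or back to the buyer after the deadline. Neither party has to trust the other to send money at the right moment.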

The vision is a future where AI agents can autonomously discover, verify, and purchase knowledge—creating an economy of information that operates at machine speed while respecting human governance.
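The discover, verify, and purchase loop, with human governance kept in the picture, can be sketched as an agent that spends from a human-set budget, buys only assets already judged "Fact", and escalates "Debate" items instead of acting on them. Everything here (the `Offer` and `Agent` types, verdicts as plain strings, the asset IDs) is a hypothetical illustration, not a description of any existing agent framework.

```python
from dataclasses import dataclass, field


@dataclass
class Offer:
    asset_id: str
    price: int    # smallest currency units
    verdict: str  # "Fact" or "Debate", e.g. from an entropy check


@dataclass
class Agent:
    budget: int   # spending cap set by a human operator
    purchased: list[str] = field(default_factory=list)
    escalated: list[str] = field(default_factory=list)

    def consider(self, offer: Offer) -> str:
        """Buy verified, affordable knowledge; escalate contested items to a human."""
        if offer.verdict != "Fact":
            self.escalated.append(offer.asset_id)
            return "escalated"
        if offer.price > self.budget:
            return "skipped"
        self.budget -= offer.price
        self.purchased.append(offer.asset_id)
        return "bought"


agent = Agent(budget=500)
offers = [Offer("kg:cert-1", 200, "Fact"),
          Offer("kg:claim-2", 50, "Debate"),
          Offer("kg:cert-3", 400, "Fact")]
results = [agent.consider(o) for o in offers]
print(results, agent.budget)
```

The human role lives in two places: the budget caps what the agent can spend at machine speed, and the escalation list is where contested knowledge waits for a person.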

You might ask: why is an AI consulting company writing about decentralized infrastructure and knowledge graphs?

The answer is simple: our clients need to make decisions today that will affect their position in 5-10 years. Whether or not this specific vision materialises, the underlying trends are real:

We do not claim to know which specific technologies will win. We do believe that understanding these possibilities is essential for strategic planning.

We have described a possible architecture: decentralized graphs, computable entropy, tokenized value exchange, autonomous agents. It is elegant. It is powerful. And it is insufficient.

Infrastructure provides the substrate. Humans provide the judgment.

Even in a world where AI agents can automatically distinguish "Fact" from "Debate" using on-chain verification, the most consequential domains will remain High Entropy: ethics, relationships, meaning, beauty, conflict, healing. These are the spaces where verification is impossible because the "answer" does not exist until we create it together.

The capacity to operate in these High Entropy domains, where no protocol can tell you what to do, remains irreducibly human. It requires presence, intuition, nervous system regulation, and embodied awareness.

This is why, alongside our work in AI infrastructure, we invest in Somatic Intelligence. The Verifiable Web may handle our facts; we must handle ourselves.